In this series of blog posts, I want to talk about the CQRS/ES (Command Query Responsibility Segregation with Event Sourcing) architecture pattern, and specifically about how it can be applied in a legacy system.

The essence of CQRS is simple: where before there was one service, there are now two: one optimized for writing and the other for reading.

This idea stems from the insight that in most applications data is read much more often than it is written. CQRS allows you to scale these two sides independently and to use different techniques for each. One concrete example is an ORM, and more specifically its lazy loading feature: this is typically very useful when writing data but not when reading it. If, for example, you want to generate a report in a performant and efficient way, it is often better to write a plain SQL query that fetches just the data you need (without an ORM and without lazy loading).
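
As a rough illustration of the split, the Java sketch below separates a write side that validates commands from a read side that keeps its own query-shaped copy of the data. Everything in it is hypothetical (the stock domain, `StockCommandService`, `LowStockQueryService`, the threshold), and the read side is updated synchronously and in-process only to keep the example short; in a real CQRS setup it is often updated asynchronously.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BiConsumer;

// Write side (hypothetical): accepts commands, enforces rules, owns the authoritative state.
final class StockCommandService {
    private final Map<String, Integer> stockBySku = new HashMap<>();
    private final List<BiConsumer<String, Integer>> listeners = new ArrayList<>();

    void subscribe(BiConsumer<String, Integer> listener) {
        listeners.add(listener);
    }

    void adjustStock(String sku, int delta) {
        int updated = stockBySku.getOrDefault(sku, 0) + delta;
        if (updated < 0) {
            throw new IllegalArgumentException("stock for " + sku + " would become negative");
        }
        stockBySku.put(sku, updated);
        listeners.forEach(listener -> listener.accept(sku, updated)); // notify the read side
    }
}

// Read side (hypothetical): a separate model shaped for one query, with no business rules.
final class LowStockQueryService {
    private final Map<String, Integer> snapshot = new HashMap<>();
    private final int threshold;

    LowStockQueryService(int threshold) {
        this.threshold = threshold;
    }

    void onStockChanged(String sku, int quantity) {
        snapshot.put(sku, quantity);
    }

    // Returns the SKUs below the threshold, sorted alphabetically.
    List<String> lowStockSkus() {
        return snapshot.entrySet().stream()
                .filter(entry -> entry.getValue() < threshold)
                .map(Map.Entry::getKey)
                .sorted()
                .toList();
    }
}
```

Because `LowStockQueryService` never enforces business rules, it can be shaped, stored and scaled purely for the query it answers, much like a hand-written SQL query for a report.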

ES builds on the idea of CQRS and goes one step further by modelling the domain model on the write side of an application as a stream of events. Instead of a “static” relational database model that is changed in place with every update (typically with the aid of an ORM), you rebuild the domain model for every change by replaying all the events for a given aggregate (in the correct order!). This reconstructs the current state of the aggregate in memory, after which you can apply new changes that lead to new events.
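
To make the replay idea concrete, here is a minimal Java sketch of an event-sourced aggregate. The `Order` aggregate and its events (`OrderPlaced`, `QuantityChanged`, `OrderCancelled`) are hypothetical; the point is the shape: state is rebuilt by applying stored events in order, commands are validated against that rebuilt state, and a successful command produces new events instead of updating columns.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical domain events for a hypothetical Order aggregate.
interface OrderEvent {}
record OrderPlaced(String orderId, int quantity) implements OrderEvent {}
record QuantityChanged(String orderId, int newQuantity) implements OrderEvent {}
record OrderCancelled(String orderId) implements OrderEvent {}

final class Order {
    private String id;
    private int quantity;
    private boolean cancelled;
    // Events produced by commands on this instance, not yet stored.
    private final List<OrderEvent> uncommitted = new ArrayList<>();

    // Rebuild the current state in memory by replaying the stored history in order.
    static Order rehydrate(List<OrderEvent> history) {
        Order order = new Order();
        for (OrderEvent event : history) {
            order.apply(event);
        }
        return order;
    }

    // Commands are validated against the rebuilt state and produce new events.
    void changeQuantity(int newQuantity) {
        if (cancelled) throw new IllegalStateException("order " + id + " is cancelled");
        if (newQuantity <= 0) throw new IllegalArgumentException("quantity must be positive");
        raise(new QuantityChanged(id, newQuantity));
    }

    void cancel() {
        if (cancelled) throw new IllegalStateException("order " + id + " is already cancelled");
        raise(new OrderCancelled(id));
    }

    private void raise(OrderEvent event) {
        apply(event);           // update the in-memory state
        uncommitted.add(event); // keep it so it can be appended to the event store
    }

    // Pure state transitions: past events are facts, so nothing is validated here.
    private void apply(OrderEvent event) {
        if (event instanceof OrderPlaced placed) {
            id = placed.orderId();
            quantity = placed.quantity();
        } else if (event instanceof QuantityChanged changed) {
            quantity = changed.newQuantity();
        } else if (event instanceof OrderCancelled) {
            cancelled = true;
        }
    }

    int quantity() { return quantity; }
    boolean isCancelled() { return cancelled; }
    List<OrderEvent> uncommittedEvents() { return List.copyOf(uncommitted); }
}
```

Replaying `OrderPlaced("o-1", 3)` followed by `QuantityChanged("o-1", 5)` yields an `Order` with quantity 5; calling `changeQuantity(7)` and then `cancel()` adds exactly two new events to `uncommittedEvents()`, ready to be appended to the event store.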

In this blog series, I want to focus on the why and the how of applying this architecture pattern in a legacy application: a big monolith that has been running successfully in production for many years.

When I talk about ES in this series, I specifically mean ES in the context of DDD (Domain-Driven Design), where ES focuses on modelling a business domain as a series of events. In this context, ES is completely independent of any specific technology. A lot of people think of Kafka when they hear “Event Sourcing”, and for IoT devices that send out events, or for processing data with event streams, Kafka is definitely a relevant product.

However, Kafka is not suited to the type of Event Sourcing I am talking about here. We need a form of optimistic locking to make sure that only events that have been validated against the current state of the domain model are written to the event store. At the time of writing this is not possible in Kafka, and after years of waiting for support for it, chances are slim that this feature will be added any time soon.
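
A minimal Java sketch of that optimistic check: every append states the stream version the writer saw when it rebuilt the aggregate, and the store rejects the append if another writer got there first. `InMemoryEventStore` and `ConcurrencyException` are hypothetical names, not a real product's API; a relational event store would typically enforce the same rule in the database, for example with a unique constraint on (stream id, version).

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical exception signalling that the stream changed since it was read.
final class ConcurrencyException extends RuntimeException {
    ConcurrencyException(String message) {
        super(message);
    }
}

// Hypothetical in-memory event store with an optimistic concurrency check per stream.
final class InMemoryEventStore<E> {
    private final Map<String, List<E>> streams = new HashMap<>();

    // Returns a snapshot of the stream; its size is the version the caller has seen.
    synchronized List<E> load(String streamId) {
        return List.copyOf(streams.getOrDefault(streamId, List.of()));
    }

    // expectedVersion = number of events the caller replayed before validating its command.
    synchronized void append(String streamId, int expectedVersion, List<E> newEvents) {
        List<E> stream = streams.computeIfAbsent(streamId, id -> new ArrayList<>());
        if (stream.size() != expectedVersion) {
            throw new ConcurrencyException("stream " + streamId + " is at version "
                    + stream.size() + ", expected " + expectedVersion);
        }
        stream.addAll(newEvents);
    }
}
```

If two users both load version 1 of the same stream and both try to append, the first append succeeds and the second fails with a `ConcurrencyException`; that writer then has to reload the stream, rebuild the aggregate and re-validate its command against the new state.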

Context is king

One of the most important principles of DDD is that “context is king”. Every technique therefore needs to be evaluated in the specific context in which you are working. There are no “silver bullets”, so you always have to work out which solution is most appropriate for your specific context (and this is very hard!).

Without going into too much detail, I will try to describe the situation we found ourselves in during a particular JIDOKA project.

Below is a short summary of some of the observations we made of the existing software (AS-IS):

- The existing system was about 4 years old and relatively large in terms of code size (compared to the customer’s other custom software).

- The architecture was a classic layered monolith, consisting of several modules, a small number of separate web applications and one large shared database.

- Most of the software was written in Java (Spring stack + Hibernate) with Oracle Database as the RDBMS. In addition, a significant portion of the software was written in PL/SQL.

- Many batch jobs ran at night and during the weekend to generate KPI reports and perform validations.

- We were given one year to rewrite a large part of the system, mainly for two reasons:

  - One big business epic/feature that translated into many changes and new functionality throughout the system.

  - The quality of some parts of the system was very low (e.g. reports that were never correct, some very slow screens, usability issues, etc.).

- The performance of the system was generally good, except for some KPI reports that were very slow (loading one report could easily take 5 minutes!).

- The scalability of the system was acceptable, although it was limited due to the monolithic architecture.

We started this challenging project with a strategic DDD exercise to create a context map of the existing situation. This took about 2 to 3 days and, in retrospect, it was a very valuable exercise: by looking at the business context at this high level, and at how the existing software fit into it, we were able to choose our battles and focus on the parts that belonged to the core domain.

In this specific project, we concluded that the business domain contained about 10 subdomains, 3 of which we considered “core domains”. The software for all 3 core subdomains lived in the monolithic legacy system (there were no separate bounded contexts). Given our assignment, we wanted to focus on one specific core subdomain. In an ideal world we would have built a new application with its own bounded context and domain model, but in the real world we had to look for a suitable technical solution within the constraints and deadlines of the project.

The right tool for the right job

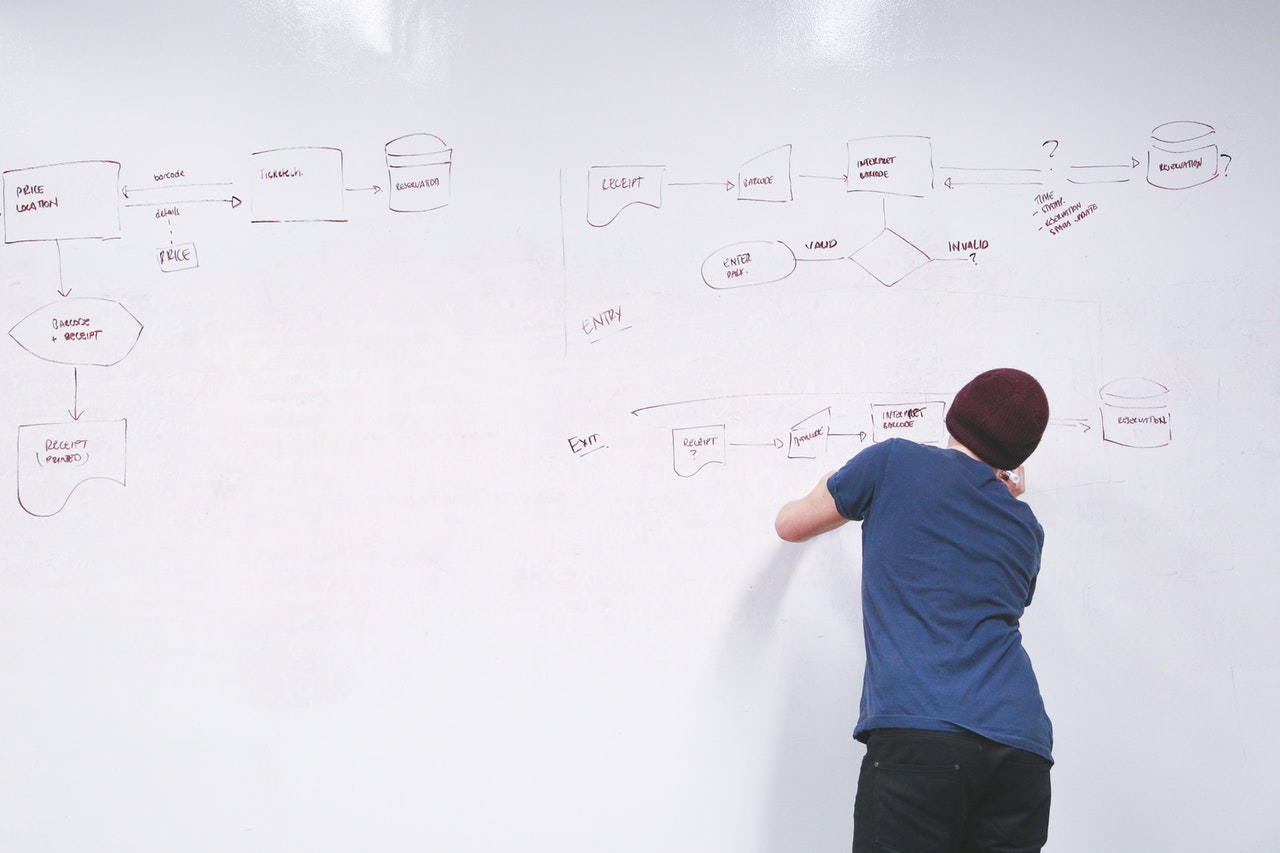

It soon became clear that implementing the most important core domain in its own bounded context was not feasible within the constraints of the project. What was possible, however, was to remodel the most important aggregate within the system. One of the main technical constraints here was that the existing database table (with about 100 columns!) was read by many different pieces of software (both via Hibernate in Java code and directly in PL/SQL code). So the options for remodelling this aggregate initially seemed limited.

After some brainstorming and Hammock Driven Development, we organized a spike to find out whether ES was a suitable solution for this part of the application. Our reasons for trying this were the following:

- The aggregate in question was read much more often than updated → CQRS seemed to fit well here

- The aggregate already had a “poor man’s version” of event sourcing: the Hibernate entity was (partially) versioned with Hibernate Envers → auditing was already very important in the existing software, and with ES you automatically get an audit log of everything that happens to an aggregate.

- The aggregate in question was the main reason that the KPI reports of this application were so slow (even though they had already been largely pre-generated with batch jobs and served through materialized views) → in an ES approach, each report could be its own projection (read model), a kind of materialized view on steroids.

- The aggregate itself contained a lot of time-related information. For example, several users had to modify information on the aggregate, and for each type of modification a separate date field was kept in the database, so that afterwards you could tell what had changed and when → “time” was already an important concept in the existing software, and time is a very natural concept in an ES approach.

- The different users responsible for adjusting data on the aggregate did so independently of each other. They never needed each other’s latest changes, and working on slightly stale data was already natural (they often worked in different time zones and with different business hours) → one of the disadvantages of ES is that the system becomes eventually consistent (read models can lag slightly behind the write side), but in the existing application this was already naturally the case within the business context, so it was not a real constraint.
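
To illustrate the projection idea from the list above, here is a minimal Java sketch of one hypothetical KPI: the average time between a hypothetical `Submitted` event and the matching `Approved` event per aggregate. The projection only consumes events, so it can be rebuilt, or a new report added later, by replaying the full history. In a real system it would be updated after the events are stored, which is where the eventual consistency mentioned above comes from.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.HashMap;
import java.util.Map;

// Hypothetical timestamped events; only the ones this report needs.
interface WorkflowEvent {}
record Submitted(String aggregateId, Instant at) implements WorkflowEvent {}
record Approved(String aggregateId, Instant at) implements WorkflowEvent {}

// A projection (read model) for one hypothetical KPI report, built purely from events.
final class ApprovalLeadTimeProjection {
    private final Map<String, Instant> submittedAt = new HashMap<>();
    private Duration totalLeadTime = Duration.ZERO;
    private int approvedCount = 0;

    void handle(WorkflowEvent event) {
        if (event instanceof Submitted submitted) {
            submittedAt.put(submitted.aggregateId(), submitted.at());
        } else if (event instanceof Approved approved) {
            Instant start = submittedAt.remove(approved.aggregateId());
            if (start != null) { // ignore approvals without a known submission
                totalLeadTime = totalLeadTime.plus(Duration.between(start, approved.at()));
                approvedCount++;
            }
        }
    }

    // The precomputed value the report reads, instead of recalculating it per request.
    Duration averageLeadTime() {
        return approvedCount == 0 ? Duration.ZERO : totalLeadTime.dividedBy(approvedCount);
    }
}
```

Two aggregates approved 2 and 4 hours after submission give an average lead time of 3 hours; the report then reads that precomputed value instead of recomputing it from the write model on every request.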

All these factors led to the conclusion that applying ES to this specific aggregate seemed to be a good match for the problem at hand.

We debated whether to use the ES pattern for other aggregates as well, but in the end we decided against it: for most other aggregates, the added complexity would outweigh the benefits.

In the next blog post, we will dive deeper into the technical details and how we applied CQRS/ES in this legacy system.