A long time ago in a JIDOKA office space not so far, far away, an idea came to life after an interesting discussion with the JIDOKA DevOps team: build a Kubernetes cluster on Azure with the sole purpose of acting as a test environment for some of our internal applications. Not the biggest or most creative idea, you might think, but then we remembered the centralized Sentry application running on our Amazon Elastic Kubernetes Service (EKS) cluster. We use that Sentry application to collect the logs of our production applications and to notify our developers whenever new, unknown bugs come up. Our test environment, meanwhile, is continually used by developers to try out new features and version upgrades of certain components.

It would help those developers if they could also see the logs of the test applications, to check whether those applications are ready to leave the incubation period and turn into butterflies, or production applications, whichever you prefer.

Beware of the big bad internet!

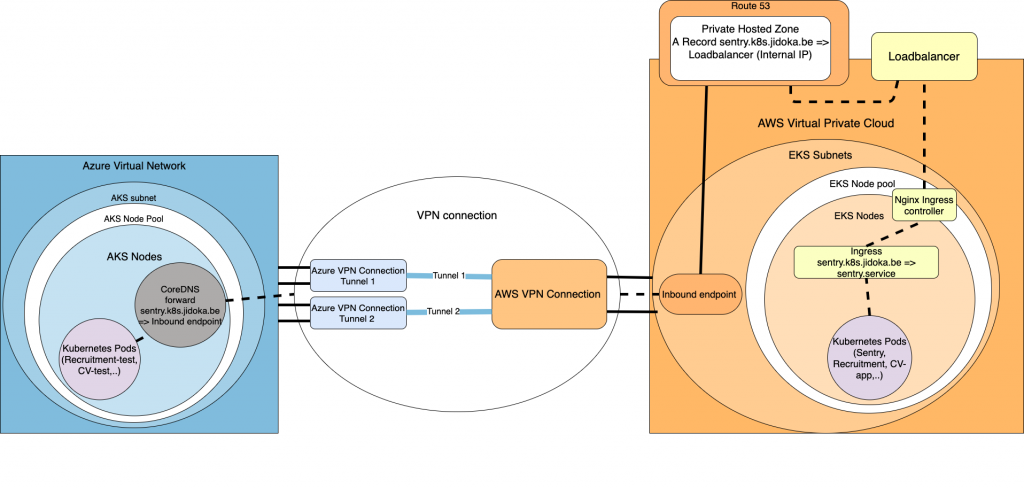

The biggest caveat that comes into play is security. The logs might contain sensitive data that we don’t want to send to Sentry over the public internet. Our solution is to connect the network of the Azure Kubernetes cluster with the network of the Amazon Kubernetes cluster through a redundant VPN connection. This gives us a safe way to route sensitive data between the two Kubernetes clusters. The first use case we found was the Sentry logs, but this could expand to retrieving Vault secrets, using database connections, sharing resources such as Elasticsearch; the list is endless.

D to the N to the S

After connecting both virtual cloud networks, we were still left with the issue of DNS. The life cycle of a Kubernetes pod is often as short as that of a mayfly, reputedly the shortest-lived animal on earth with an average lifespan of 24 hours (so don’t say you’ve learned nothing by reading this blog). Because pods, and with them their IP addresses, are replaced so frequently, routing by IP is not an option. Since all of our services are exposed under a specific hostname, we can instead configure the DNS server on the Azure cluster to forward queries for those hostnames to the Amazon side, let it handle the DNS resolution, and wait for a reply with the correct address.
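Forwarding by hostname works because the DNS server decides where to send a query based on the queried name. CoreDNS sends each query to the server block whose zone is the longest match for that name. The toy Python model below mimics that selection rule; the zone names and upstream labels are hypothetical placeholders, and it illustrates the matching logic only, not CoreDNS itself.

```python
"""Toy model of CoreDNS choosing a server block for a query.

Assumption: the zones and upstream labels in ZONES are hypothetical
placeholders, not our real configuration.
"""


def normalize(name: str) -> str:
    """Lower-case a DNS name and ensure it ends with the root dot."""
    name = name.lower()
    return name if name.endswith(".") else name + "."


def in_zone(qname: str, zone: str) -> bool:
    """True if qname is the zone apex or any name below it."""
    qname, zone = normalize(qname), normalize(zone)
    return zone == "." or qname == zone or qname.endswith("." + zone)


def label_count(zone: str) -> int:
    """Number of labels in a zone name; the root zone has none."""
    zone = normalize(zone)
    return 0 if zone == "." else zone.count(".")


def pick_upstream(qname: str, zones: dict[str, str]) -> str:
    """Return the upstream of the longest (most specific) matching zone."""
    matches = [zone for zone in zones if in_zone(qname, zone)]
    if not matches:
        raise LookupError(f"no zone matches {qname}")
    return zones[max(matches, key=label_count)]


ZONES = {
    ".": "azure-default-upstream",                 # everything else
    "cluster.local": "kubernetes-plugin",          # in-cluster service names
    "sentry.example.com": "aws-inbound-endpoint",  # forwarded to the Amazon side
}
```

With this table, `sentry.example.com` and any name below it go to the Amazon side, while `notsentry.example.com` falls through to the default upstream, because matching happens on whole labels rather than on raw string suffixes.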

Route53 to the rescue!

The first step is to add a DNS forward zone to CoreDNS, the default DNS server in most Kubernetes clusters. In this zone, we define the hostname of the service we want to reach on the Amazon cluster. But where does this zone forward its requests to? This is where Route53 comes to save us all. With Route53, we can define a private hosted zone and associate it with the VPC of the Amazon cluster. The only job of this private hosted zone is to answer the queries that arrive through the inbound endpoint with the address of the cluster’s load balancer. To reach Route53 from outside the VPC, we create an inbound endpoint in the subnets of the Amazon cluster. This inbound endpoint receives all DNS queries that come in through the VPN tunnel and lets the private hosted zone answer them. Don’t forget to let the inbound endpoint create an IP address in each subnet of your Amazon cluster.
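The Route53 side of this setup comes down to three resources: the private hosted zone, a record pointing the hostname at the load balancer, and the inbound endpoint. As a minimal sketch, assuming the boto3 SDK and hypothetical domains, IDs and subnets, the helpers below only build the request arguments, which keeps them testable without an AWS account. Using an alias A record for the load balancer is our assumption; the setup described here does not specify the exact record type.

```python
"""Request arguments for the Route53 pieces of the Azure-to-AWS DNS setup.

Assumption: every domain, ID, subnet and region passed to these helpers is a
hypothetical placeholder. The dicts are shaped for boto3's
route53.create_hosted_zone, route53.change_resource_record_sets and
route53resolver.create_resolver_endpoint; nothing is sent to AWS here.
"""
import uuid


def private_zone_args(domain: str, vpc_id: str, region: str) -> dict:
    """Private hosted zone, visible only inside the Amazon cluster's VPC."""
    return {
        "Name": domain,
        "CallerReference": str(uuid.uuid4()),  # unique string for this create request
        "VPC": {"VPCRegion": region, "VPCId": vpc_id},
        "HostedZoneConfig": {"Comment": "Names for the Azure test cluster", "PrivateZone": True},
    }


def lb_alias_record_args(zone_id: str, hostname: str, lb_dns_name: str, lb_zone_id: str) -> dict:
    """Alias A record pointing a hostname at the cluster's internal load balancer.

    lb_zone_id is the load balancer's own canonical hosted zone ID,
    not the ID of the private hosted zone.
    """
    return {
        "HostedZoneId": zone_id,
        "ChangeBatch": {
            "Changes": [{
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": hostname,
                    "Type": "A",
                    "AliasTarget": {
                        "HostedZoneId": lb_zone_id,
                        "DNSName": lb_dns_name,
                        "EvaluateTargetHealth": False,
                    },
                },
            }]
        },
    }


def inbound_endpoint_args(name: str, subnet_ids: list[str], security_group_ids: list[str]) -> dict:
    """INBOUND resolver endpoint with one IP address per subnet."""
    if len(subnet_ids) < 2:
        # This helper assigns one IP per subnet, and Resolver endpoints
        # require at least two IP addresses.
        raise ValueError("an inbound endpoint needs IPs in at least two subnets")
    return {
        "CreatorRequestId": str(uuid.uuid4()),
        "Name": name,
        "SecurityGroupIds": security_group_ids,
        "Direction": "INBOUND",
        # Omitting "Ip" lets AWS pick a free address in each subnet.
        "IpAddresses": [{"SubnetId": subnet_id} for subnet_id in subnet_ids],
    }
```

With credentials configured, each dict can be unpacked into the matching call, for example `boto3.client("route53resolver").create_resolver_endpoint(**args)`. The endpoint's security group should allow DNS traffic (TCP and UDP port 53) from the Azure network's address range over the VPN.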

To connect the DNS server on Azure to the inbound endpoint, we configure the forward zone to send all queries for our hostname to the IP addresses of the inbound endpoint. The private hosted zone answers those queries with the internal IP of the load balancer, so the actual traffic for that hostname reaches the load balancer, which passes it on to the Ingress controller; the Ingress then routes it to the correct service. This all might seem a bit complicated, but hopefully the following picture helps you comprehend the flow. In this example, we only forward the Sentry hostname to the Amazon cluster. In theory, you could forward a whole parent domain in the CoreDNS configuration and add a wildcard A record to the private hosted zone to resolve more hostnames of the Amazon cluster.
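On the Azure side, the forward zone is a small CoreDNS server block using the `forward` plugin. The sketch below renders one in Python; the hostname and the inbound endpoint IPs are hypothetical placeholders, and `forward_server_block` is an illustrative helper, not part of any library.

```python
"""Render a CoreDNS server block that forwards one zone to AWS.

Assumption: the hostname and inbound endpoint IPs passed in are hypothetical;
use the addresses AWS assigned to your own inbound endpoint.
"""
import ipaddress


def forward_server_block(zone: str, upstream_ips: list[str], cache_ttl: int = 30) -> str:
    """Return a Corefile server block forwarding every query in `zone`."""
    for ip in upstream_ips:
        ipaddress.ip_address(ip)  # raises ValueError on a malformed address
    lines = [
        f"{zone}:53 {{",
        "    errors",
        f"    cache {cache_ttl}",
        # "." inside this block means: every name in the block's zone.
        f"    forward . {' '.join(upstream_ips)}",
        "}",
    ]
    return "\n".join(lines) + "\n"
```

Calling `forward_server_block("sentry.example.com", ["10.0.1.10", "10.0.2.10"])` produces a block that forwards only the Sentry hostname to both endpoint IPs; passing the parent domain instead forwards every name under it. If the Azure cluster runs on AKS, custom server blocks like this one are typically added through the `coredns-custom` ConfigMap in the `kube-system` namespace, under a key ending in `.server`.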

Difficult difficult lemon difficult

You might be wondering why we go through all of this trouble rather than sticking with two Amazon clusters, which would give us fewer issues with routing and DNS resolution. First of all, the knowledge gained from using products of other cloud providers broadens our expertise and lets us offer more options to our clients.

Second, we at JIDOKA are not afraid of a challenge and see this as a learning opportunity rather than a detour. Besides, some of Azure’s features and products are interesting for our use case, one of them being its load balancer implementation. Azure’s integrated cost-saving mechanisms also allow us to run a smaller and more cost-effective test environment.

Was it worth it?

Fortunately, it didn’t cost everything: a good amount of research combined with the right can-do mentality is enough to reproduce this environment. The experience gained from this challenge made the struggles and many head scratches worthwhile.